The Question You're Not Ready For

"Walk me through your institution's governance framework for artificial intelligence and machine learning systems used in financial decision-making, customer data processing, and operational risk management."

Most institutions cannot answer this question.

Not because they don't use AI — they almost certainly do — but because no one established governance over how AI is adopted, deployed, monitored, and controlled.

The examiner follows up:

- "Do you have an inventory of AI systems in use?"

- "How do you validate AI models used in credit decisioning?"

- "What controls prevent AI systems from exposing customer PII?"

- "How do you govern AI embedded in third-party vendor platforms?"

- "What's your process for approving new AI adoption?"

If the answer is "we're working on that" or "we don't think we use AI" — you have ungoverned risk.

And examiners are starting to ask.

Where AI Hides in Financial Institutions

Most institutions underestimate their AI exposure because they think AI adoption requires deliberate deployment of machine learning models.

In reality, you use AI if you have:

Fraud detection and anti-money laundering tools

Most modern fraud platforms use machine learning to detect anomalous transactions, flag suspicious patterns, and generate risk scores. If you can't explain how those models work, how they're trained, or how you validate their accuracy — you have ungoverned model risk.

Credit decisioning and underwriting platforms

Alternative credit scoring models, automated underwriting systems, and lending decisioning tools increasingly use AI. If these systems make or influence credit decisions, they introduce Fair Lending risk, ECOA compliance risk, and model risk management requirements.

Customer service chatbots and virtual assistants

AI-powered chatbots process customer inquiries, handle complaints, and access account data. If you don't govern what data these systems can access, where that data goes, or how responses are generated — you have data governance and consumer protection risk.

Data analytics and business intelligence platforms

Many analytics tools use AI to generate insights, predict trends, and automate reporting. If finance teams use AI-generated analysis for forecasting or decision-making without understanding model limitations — you have operational risk.

Third-party vendor platforms

Your core banking system, payment processor, loan origination platform, or risk management tool likely embeds AI. Your contract probably doesn't require the vendor to disclose AI use, explain how it works, or govern its data handling. When examiners ask how you oversee third-party AI risk — you can't answer.

The common thread:

AI adoption happened without governance oversight — because no one defined what requires governance approval.

Why This Is an Examination Topic Now

AI governance isn't a future regulatory concern. It's a current examination focus, particularly in these areas:

Model Risk Management (SR 11-7)

Federal banking regulators expect institutions to validate models used in financial decision-making. If your fraud detection tool or credit scoring platform uses machine learning — it's a model. If you haven't validated it, you have a model risk management gap.

Fair Lending and ECOA Compliance

If AI influences credit decisions, examiners will ask: How do you prevent bias? How do you validate fair outcomes? How do you explain adverse actions? If you don't govern AI decisioning systems for Fair Lending risk — you have a compliance gap.

Data Privacy and GLBA

If AI systems process customer data, examiners will ask: What data can AI access? Where does that data go? Who controls it? If staff paste customer information into ChatGPT to draft communications without data governance controls, you've exposed PII to an external platform.

Third-Party Risk Management

Examiners expect you to govern vendor risk — including vendor use of AI. If your vendor contracts don't address AI transparency, data handling, or model governance — you have a third-party risk management gap.

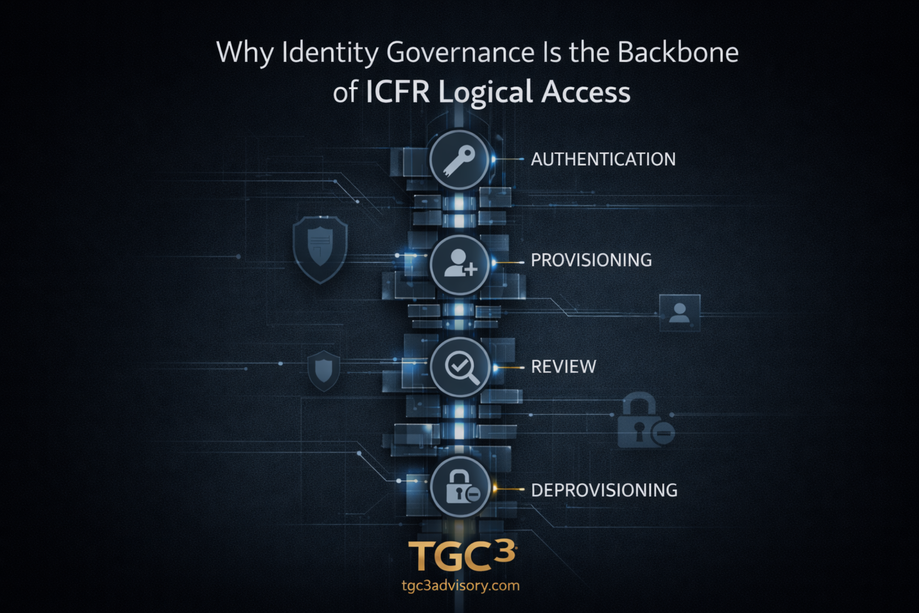

Operational Risk and ICFR

If AI automates operational processes, makes decisions, or generates financial data, it introduces control risk. If you haven't assessed whether AI systems maintain segregation of duties, produce audit trails, or operate within approved parameters, you have operational and ICFR risk.

The regulatory expectation is straightforward: If you use AI, you govern it. If you can't govern it, you don't use it.

The Five Governance Failures That Create AI Risk

Failure 1: No AI Inventory

What we see:

- Institution doesn't know what AI tools are in use

- Business units adopt AI-powered SaaS platforms without IT or Risk review

- Vendor AI is invisible (contracts don't require AI disclosure)

- No centralized catalog of AI systems, use cases, or owners

What examiners conclude: You can't govern what you don't know exists.

What defensible looks like:

- Comprehensive AI inventory maintained by Risk or Governance

- Every AI tool cataloged with: owner, use case, data accessed, vendor, risk rating

- New AI adoption requires registration before deployment

- Vendor contracts require disclosure of AI use and updates
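To make the inventory requirement concrete, here is a minimal Python sketch of a catalog record carrying the fields above (owner, use case, data accessed, vendor, risk rating) plus a registration-before-deployment check. The class names, the three-level `RiskRating` scale, the example entry, and the in-memory storage are all hypothetical; in practice the inventory would live in whatever GRC or asset-management tool the institution already uses.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List, Optional


class RiskRating(Enum):
    # Hypothetical three-tier scale; substitute the institution's own ratings.
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass
class AIInventoryRecord:
    """One catalog entry with the fields a defensible inventory tracks."""
    system_name: str
    owner: str
    use_case: str
    data_accessed: List[str]
    vendor: Optional[str]  # None for systems built in-house
    risk_rating: RiskRating


class AIInventory:
    """In-memory stand-in for a real inventory system."""

    def __init__(self) -> None:
        self._records: Dict[str, AIInventoryRecord] = {}

    def register(self, record: AIInventoryRecord) -> None:
        # Reject entries without a named owner: ownership is a required field.
        if not record.owner.strip():
            raise ValueError(f"{record.system_name}: owner is required")
        self._records[record.system_name] = record

    def may_deploy(self, system_name: str) -> bool:
        # Registration before deployment: anything not cataloged is blocked.
        return system_name in self._records


inventory = AIInventory()
inventory.register(AIInventoryRecord(
    system_name="fraud-scoring",          # hypothetical example entry
    owner="Fraud Operations",
    use_case="transaction anomaly scoring",
    data_accessed=["transaction history", "account profile"],
    vendor="Example Fraud Vendor",        # placeholder vendor name
    risk_rating=RiskRating.HIGH,
))
print(inventory.may_deploy("fraud-scoring"))       # True
print(inventory.may_deploy("marketing-copy-bot"))  # False
```

An unregistered system fails the check by default, which is the point: adoption that skips registration is blocked rather than silently allowed.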

Failure 2: No Risk Appetite for AI

What we see:

- No board-level discussion of AI risk tolerance

- No policy defining acceptable vs. prohibited AI use cases

- No criteria for what AI applications require executive approval

- Ad-hoc AI adoption based on individual business unit decisions

What examiners conclude: Management doesn't understand or control AI risk exposure.

What defensible looks like:

- Board-approved AI risk appetite framework

- Policy defining: what AI use cases are acceptable, what's prohibited, what requires escalation

- Clear criteria: AI in credit decisions → executive approval required. AI in marketing → standard vendor review.

- Risk Committee oversight of high-risk AI applications
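The escalation criteria can be written as a simple lookup. The sketch below assumes a hypothetical policy table in which credit decisioning requires executive approval and marketing gets standard vendor review, as in the example above; the category names and the sample prohibited use case are placeholders for whatever the board-approved policy actually defines.

```python
from enum import Enum


class ApprovalPath(Enum):
    PROHIBITED = "prohibited"
    EXECUTIVE_APPROVAL = "executive approval"
    STANDARD_VENDOR_REVIEW = "standard vendor review"


# Hypothetical policy table; a board-approved policy supplies the real entries.
POLICY = {
    "credit_decisioning": ApprovalPath.EXECUTIVE_APPROVAL,
    "marketing": ApprovalPath.STANDARD_VENDOR_REVIEW,
}

# Hypothetical prohibited use case, e.g. customer PII in a public chatbot.
PROHIBITED_USE_CASES = {"customer_pii_in_public_chatbot"}


def approval_path(use_case_category: str) -> ApprovalPath:
    """Return the approval path a proposed AI use case must follow."""
    if use_case_category in PROHIBITED_USE_CASES:
        return ApprovalPath.PROHIBITED
    # Unclassified use cases escalate by default instead of passing as low risk.
    return POLICY.get(use_case_category, ApprovalPath.EXECUTIVE_APPROVAL)


print(approval_path("credit_decisioning").value)   # executive approval
print(approval_path("marketing").value)            # standard vendor review
print(approval_path("hr_resume_screening").value)  # executive approval
```

One design choice worth copying whatever the tooling: a use case the policy has not yet classified defaults to executive approval, so new categories escalate instead of slipping through as low risk.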

Failure 3: No Model Risk Management for AI

What we see:

- AI models deployed without validation

- No documentation of how AI systems were trained or what data was used

- No performance monitoring for model drift or accuracy degradation

- No process for identifying when AI qualifies as a "model" requiring validation

What examiners conclude: You treat AI as a black box. You can't explain how it works, validate its accuracy, or ensure it operates as intended.

What defensible looks like:

- Model risk management framework extended to AI systems

- AI models used in credit, fraud, or financial decisions validated before production deployment

- Model documentation: training data, limitations, expected performance, testing results

- Performance monitoring dashboards tracking accuracy, drift, and false positive rates

- Annual model revalidation
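Drift monitoring needs a metric. One widely used choice in credit and fraud scoring is the Population Stability Index (PSI), which compares the share of scores falling in each score bin today against the share at validation time. The Python sketch below computes PSI and a false positive rate; the 0.10 and 0.25 bands are a common industry rule of thumb rather than a regulatory threshold, and the function names and bin shares are hypothetical.

```python
import math
from typing import Sequence


def population_stability_index(expected: Sequence[float],
                               actual: Sequence[float],
                               eps: float = 1e-6) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected),
    where each input lists the share of scores in each bin."""
    if len(expected) != len(actual):
        raise ValueError("both distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        # Floor empty bins at eps so the logarithm stays finite.
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


def false_positive_rate(false_positives: int, true_negatives: int) -> float:
    """FP / (FP + TN): share of legitimate cases the model wrongly flagged."""
    total = false_positives + true_negatives
    return false_positives / total if total else 0.0


def drift_status(psi: float) -> str:
    # Rule-of-thumb bands common in credit scoring, not a regulatory standard.
    if psi < 0.10:
        return "stable"
    if psi < 0.25:
        return "monitor"
    return "investigate and consider revalidation"


baseline = [0.25, 0.25, 0.25, 0.25]  # bin shares at validation (hypothetical)
current = [0.10, 0.20, 0.30, 0.40]   # bin shares this month (hypothetical)
psi = population_stability_index(baseline, current)
print(round(psi, 3), drift_status(psi))  # 0.228 monitor
print(false_positive_rate(50, 950))      # 0.05
```

Feeding these numbers into a dashboard on a fixed schedule, with a documented action for each band, is what turns "performance monitoring" into evidence an examiner can review.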

Failure 4: No Data Governance for AI

What we see:

- AI tools given broad data access without classification controls

- Customer service teams paste customer details into ChatGPT to draft responses, exposing PII to external platforms

- No data flow mapping for AI systems (where does data go? who controls it?)

- No vendor data processing agreements addressing AI-specific risks

What examiners conclude: You don't govern what data AI can access, how it's used, or where it's processed.

What defensible looks like:

- Data classification controls: AI systems restricted to data appropriate for their use case

- PII protection policies: AI tools processing PII require vendor data processing agreements, encryption, access controls

- Data flow mapping: Every AI system documented for data inputs, processing location, retention, vendor access

- Vendor contracts include AI data governance terms
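Data classification controls reduce to a rule evaluated before an AI system touches a dataset. This sketch assumes a hypothetical four-level classification and a vendor-hosted AI tool: access is allowed only up to the ceiling approved for the system's use case, and customer PII additionally requires a data processing agreement and encryption, mirroring the PII protection policy above. Real enforcement would happen in the data platform's access layer, not in a standalone function.

```python
from enum import IntEnum


class DataClass(IntEnum):
    # Hypothetical classification levels, least to most sensitive.
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    CUSTOMER_PII = 3


def ai_access_allowed(requested: DataClass, approved_ceiling: DataClass,
                      has_data_processing_agreement: bool,
                      encrypted: bool) -> bool:
    """Decide whether a vendor-hosted AI system may read a dataset."""
    # Access control: never exceed the ceiling approved for this use case.
    if requested > approved_ceiling:
        return False
    # PII additionally needs a vendor DPA and encryption (PII protection policy).
    if requested == DataClass.CUSTOMER_PII:
        return has_data_processing_agreement and encrypted
    return True


# A marketing tool approved only up to INTERNAL data asks for customer PII:
print(ai_access_allowed(DataClass.CUSTOMER_PII, DataClass.INTERNAL, True, True))  # False
```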

Failure 5: No Third-Party AI Oversight

What we see:

- Vendor contracts don't address AI use, model governance, or data handling

- Institution doesn't know which vendors use AI or how

- No process for vendor AI risk assessment during due diligence

- No ongoing monitoring of vendor AI changes or updates

What examiners conclude: You outsource risk but don't govern it.

What defensible looks like:

- Vendor due diligence includes AI disclosure requirements

- Vendor contracts require: AI transparency, model governance documentation, data processing terms, change notification

- Vendor risk assessments include AI-specific risk evaluation

- Ongoing monitoring for vendor AI updates, performance issues, or control changes
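A contract review against the four required terms above can be tracked as a simple gap check. The term labels below are hypothetical shorthand, and a real assessment would record evidence (clause references, review dates) rather than a yes/no flag.

```python
from typing import Dict, List

# The four contract terms listed above, as hypothetical shorthand labels.
REQUIRED_AI_TERMS = (
    "ai_transparency",
    "model_governance_documentation",
    "data_processing_terms",
    "change_notification",
)


def contract_gaps(contract_terms: Dict[str, bool]) -> List[str]:
    """Return the required AI terms a vendor contract does not cover.
    Terms that were never reviewed count as missing."""
    return [t for t in REQUIRED_AI_TERMS if not contract_terms.get(t, False)]


reviewed = {"data_processing_terms": True, "ai_transparency": False}
print(contract_gaps(reviewed))
# ['ai_transparency', 'model_governance_documentation', 'change_notification']
```

Treating unreviewed terms as gaps keeps the default conservative: a vendor contract is only clean once every term has been positively confirmed.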

What AI Governance Looks Like

Defensible AI governance isn't about prohibiting AI use. It's about governing AI risk the same way you govern any operational or model risk.

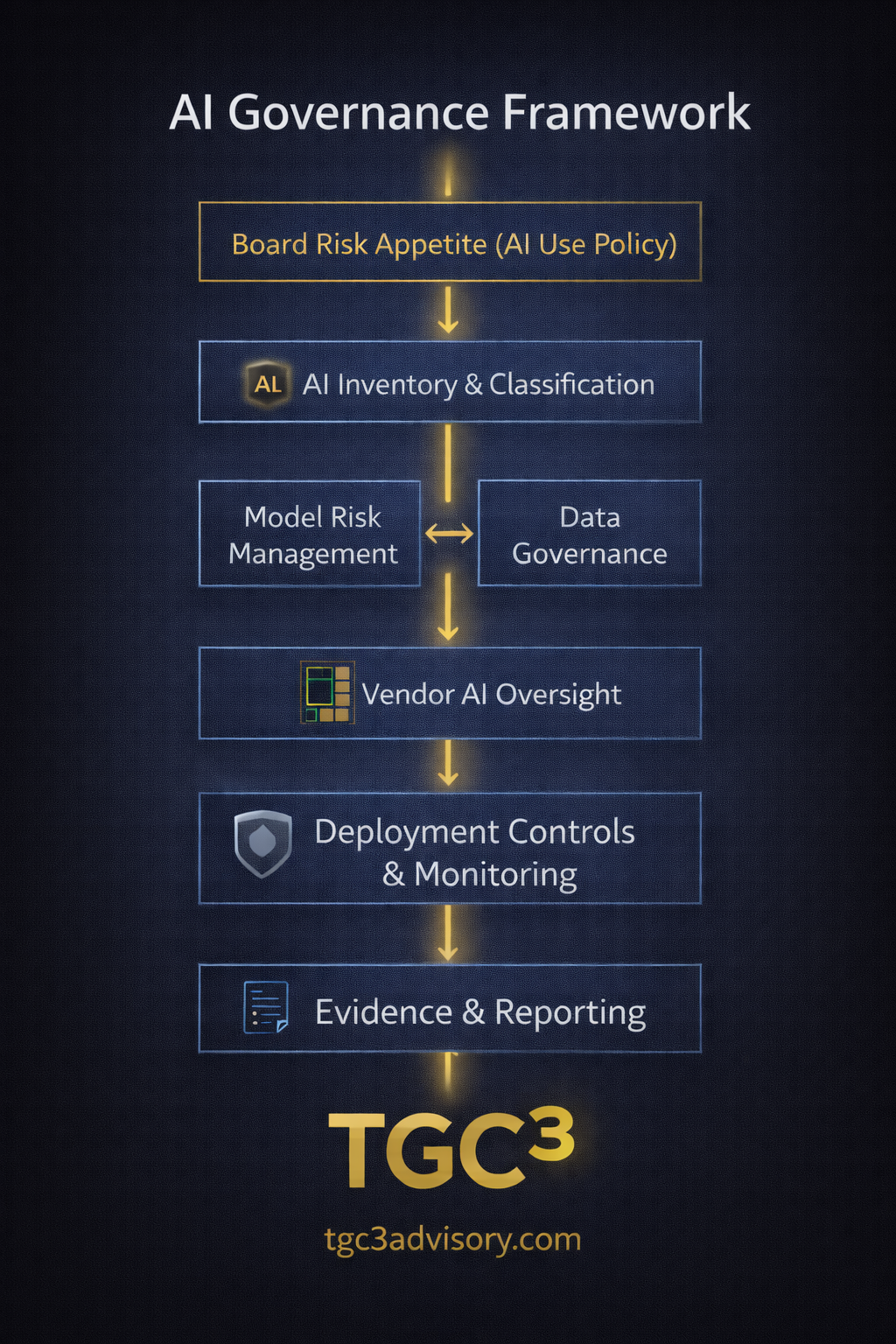

The governance framework examiners expect:

1. AI Risk Appetite & Policy: Board-approved framework defining acceptable AI use, prohibited applications, and escalation criteria. Clear criteria for what requires executive or Risk Committee approval.

2. AI Inventory & Use Case Classification: Comprehensive catalog of AI tools with ownership, use case, data access, and risk ratings. New AI adoption requires registration and risk assessment before deployment.

3. Model Risk Management for AI: AI systems making financial or operational decisions validated before production. Model documentation, performance monitoring, and annual revalidation.

4. Data Governance for AI: Data classification controls preventing inappropriate AI access to PII or sensitive data. Vendor data processing agreements for AI platforms. Data flow mapping showing what data AI systems access and where it's processed.

5. Third-Party AI Risk Management: Vendor due diligence includes AI disclosure. Contracts require AI transparency, model governance, and change notification. Ongoing monitoring of vendor AI performance and updates.

6. AI Change & Deployment Governance: Approval workflows for new AI adoption. Risk Committee review for high-risk AI use cases. Testing and validation before production deployment.

Not aspirational principles. Operational governance mechanisms with evidence loops.

The Window Is Now

AI governance is in the same position identity governance was five years ago: institutions know it matters, but most haven't built the mechanisms.

The difference is that examiners are asking about AI now, rather than waiting for the first major failures.

If your institution uses AI (and it almost certainly does), you have two options:

- Wait until the examiner asks, then explain you haven't established governance yet

- Build AI governance infrastructure now, before it becomes a finding

The institutions that succeed are the ones that design governance during adoption — not after examination failures.

Not sure what AI your institution uses or how to govern it? Our AI Governance Readiness Assessment identifies AI exposure, evaluates current governance posture, and delivers a roadmap to defensible AI oversight.